About FATE

How can we counteract the bias inherent in search engines and achieve fair representation while still maintaining a high degree of utility? We are working to address the bias inherent in search engine results and recommendation systems.

System-focused Work

Our experiments with Google and New York Times datasets show that the top web search results returned by search engines are topically biased. Search and recommendation systems have been shown to be highly biased and to lack accountability and transparency. We are going beyond understanding the causes of this bias to find ways to de-bias the information presented to end users.

Our simulations with these datasets have been reported in several publications. This system-focused work also includes a new theoretical framework for modeling bias and relevance in a search system.
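One simple way to put a number on "topically biased" top results is to compare the topic mix of the top-k results with a reference mix, such as the topic distribution of the whole collection. The sketch below assumes every result already carries a single topic label and uses total variation distance as the bias score. The function names, the labels, the sample data, and the choice of metric are illustrative assumptions, not necessarily the measure used in our publications.

```python
from collections import Counter


def topic_distribution(topics):
    """Return the fraction of items that carry each topic label."""
    if not topics:
        raise ValueError("need at least one topic label")
    counts = Counter(topics)
    total = len(topics)
    return {topic: n / total for topic, n in counts.items()}


def topical_bias(ranked_topics, reference, k):
    """Hypothetical bias score: total variation distance between the
    topic mix of the top-k results and a reference topic mix.

    0.0 means the top-k results mirror the reference exactly;
    1.0 means they share no topics with it at all.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    observed = topic_distribution(ranked_topics[:k])
    all_topics = set(observed) | set(reference)
    return 0.5 * sum(
        abs(observed.get(t, 0.0) - reference.get(t, 0.0)) for t in all_topics
    )


# Hypothetical data: topic labels of a ranked result list, in rank order,
# and the topic mix of the full collection used as the reference.
ranked = ["politics", "politics", "politics", "sports",
          "politics", "health", "sports", "health"]
collection = {"politics": 0.4, "sports": 0.3, "health": 0.3}
print(round(topical_bias(ranked, collection, k=5), 2))  # prints 0.4
```

Here the top five results are 80% politics against a 40% share in the collection, so the score is well above zero even though every topic does appear further down the list.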

User-focused Work

✓ Investigate how users perceive search results from the perspective of bias and fairness.

✓ Observe users’ awareness of the presence of bias in search results.

✓ Analyze how users react to search and recommendation results with increased diversity.

✓ Design algorithms to reduce bias and increase fairness in search and recommendation systems.

Current Experiments

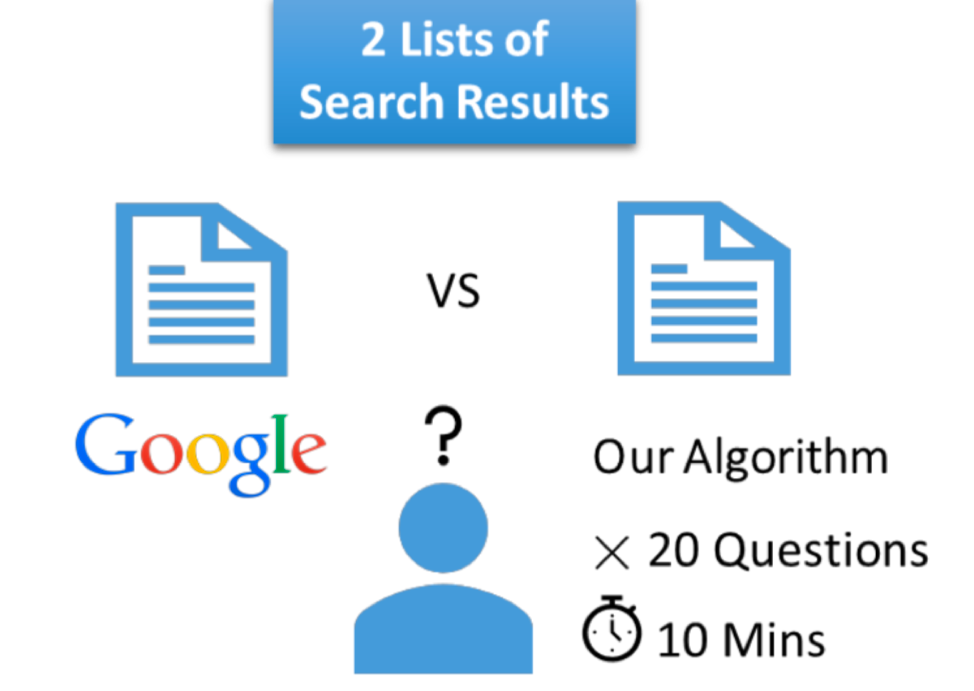

✓ Recruited participants through Mechanical Turk and through open calls.

✓ Limited participation to English speakers in the US.

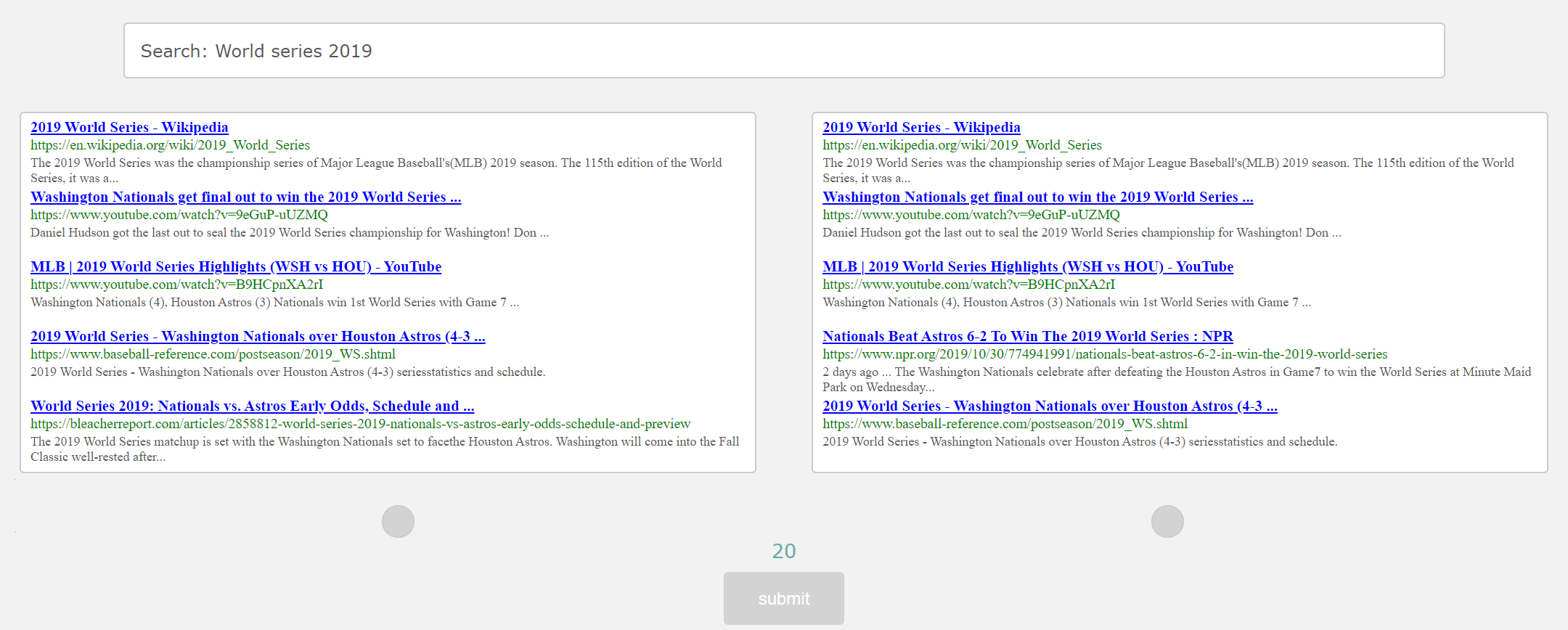

✓ Asked participants to choose between two ranked lists of results for a search query. One list may come from Google, while the other is modified by one of our diversity- or fairness-increasing algorithms.
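The second list in each pair comes from a re-ranking step. Purely as an illustration of what such a step can look like (this is not one of our algorithms), the sketch below greedily rebuilds a ranked list, trading off each result's relevance score against how over-represented its topic already is among the results picked so far, in the spirit of maximal marginal relevance (MMR). The tuple layout `(doc_id, relevance, topic)`, the `lam` trade-off parameter, the function name, and the sample data are all hypothetical assumptions.

```python
from collections import Counter


def rerank_for_diversity(results, lam=0.5):
    """Hypothetical greedy re-ranker over (doc_id, relevance, topic) tuples.

    At each step, pick the remaining result that maximizes
        lam * relevance - (1 - lam) * redundancy,
    where redundancy is the share of already-selected results that have
    the same topic. lam=1.0 keeps the pure relevance order.
    """
    remaining = list(results)
    selected = []
    topic_counts = Counter()

    def score(item):
        _, relevance, topic = item
        # Share of already-selected results with this topic (0 before the first pick).
        redundancy = topic_counts[topic] / len(selected) if selected else 0.0
        return lam * relevance - (1 - lam) * redundancy

    while remaining:
        best = max(remaining, key=score)  # on ties, max keeps the earlier (higher-ranked) item
        remaining.remove(best)
        selected.append(best)
        topic_counts[best[2]] += 1
    return selected


# Hypothetical original ranking in which one topic dominates the top positions.
original = [
    ("d1", 0.95, "politics"),
    ("d2", 0.90, "politics"),
    ("d3", 0.85, "politics"),
    ("d4", 0.80, "sports"),
    ("d5", 0.75, "health"),
]
print([doc for doc, _, _ in rerank_for_diversity(original, lam=0.5)])
# prints ['d1', 'd4', 'd5', 'd2', 'd3']
```

Setting `lam=1.0` reproduces the original relevance order, while lower values move under-represented topics upward at some cost in raw relevance, which is the fairness-versus-utility trade-off posed at the top of this page.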

User Study Components

An example of the questionnaire